Personalised tutors – a dumb rich kid is more likely to graduate from college than a smart poor one

Author: Donald Clark


A dumb rich kid is more likely to graduate from college than a smart poor one, and traditional teaching has not solved the problem of gaps in attainment. Scotland, my own country, is a great example: the whole curriculum was up-ended and nothing has been gained. In truth, we now have a solution that has been around for some time. I have been involved in such systems for decades.

AI chatbot tutors, such as Khanmigo, are now being tested in schools, but not for the first time. The Gates Foundation has been at this for over 8 years, and I was involved in trials from 2015 onwards, and even earlier with SCHOLAR, where we showed a grade increase among users. We know this works. From Bloom’s famous paper onwards, the evidence has been clear: detailed feedback that gets learners through the problems they encounter as they learn works.

“It will enable every student in the United States, and eventually on the planet, to effectively have a world-class personal tutor,” says Salman Khan. Gates, who has provided $10 million to Khan, agreed at a recent conference: “The AIs will get to that ability, to be as good a tutor as any human ever could.”

We see in ChatGPT, Bard and other systems increased capability in accuracy, provenance and feedback, along with guardrailing. To criticise such systems for early errors now seems churlish. They’re getting better very fast.

Variety of tutor types

We are already seeing a variety of teacher-type systems emerge, as I outlined in my book AI for Learning.

Adaptive, personalised learning means adapting the online experience to the individual’s needs as they learn, in the way a personal tutor would intervene. The aim is to provide what many teachers provide: a learning experience tailored to your needs as an individual learner.

The Curious Case of Benjamin Bloom

Benjamin Bloom, best known for his taxonomy of learning (now shown to be weak and simplistic), wrote a far less read paper, The 2 Sigma Problem, which compared the lecture, the lecture with formative feedback and one-to-one tuition. It is a landmark in adaptive learning. Taking the ‘straight lecture’ group as the baseline, he found that the average student taught with a ‘formative feedback’ approach outperformed 84% of the lecture group, and the average student receiving ‘one-to-one tuition’ outperformed an astonishing 98%. Google’s Peter Norvig famously said that if you only have to read one paper to support online learning, this is it. In other words, the gain in efficacy from tailored one-to-one teaching, driven by the increase in on-task learning, is huge. This paper deserves to be read by anyone looking at improving the efficacy of learning, as it shows hugely significant improvements from simply altering the way teachers interact with learners. Online learning has to date mostly delivered fairly linear and non-adaptive experiences, whether through self-paced structured learning, scenario-based learning, simulations or informal learning. But we are now in the position of having technology, especially AI, that can deliver what Bloom called ‘one-to-one tutoring’.
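Bloom reported these gains as effect sizes. Formative feedback (mastery learning) gave about one standard deviation, and tutoring gave about two, the ‘2 sigma’ of the title. If test scores are roughly normally distributed, the percentages follow from the standard normal cumulative distribution function. The short Python sketch below reproduces them, and the function name is illustrative. Two standard deviations gives 97.7%, which is usually rounded to 98%.

```python
from math import erf, sqrt


def share_of_control_below(effect_size_sd: float) -> float:
    """Fraction of a normally distributed control group scoring below a
    student who sits `effect_size_sd` standard deviations above its mean."""
    # Standard normal CDF: Phi(z) = 0.5 * (1 + erf(z / sqrt(2)))
    return 0.5 * (1.0 + erf(effect_size_sd / sqrt(2.0)))


for label, sigma in [("formative feedback (~1 sigma)", 1.0),
                     ("one-to-one tuition (~2 sigma)", 2.0)]:
    print(f"{label}: above {share_of_control_below(sigma):.1%} of the lecture group")
# formative feedback (~1 sigma): above 84.1% of the lecture group
# one-to-one tuition (~2 sigma): above 97.7% of the lecture group
```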

Adaption can take many forms, but at the heart of the process is a decision to present something to the learner based on what the system knows about the learner, the learning or the context.

Pre-course adaptive

Macro-decisions

You can adapt a learning journey at the macro level, recommending skills, courses, even careers based on your individual needs.

Pre-test

‘Pre-test’ the learner to create a prior profile before starting the course, then present relevant content. The adaptive software makes a decision based on data specific to that individual. You may start with personal data, such as educational background, competence in previous courses and so on. This is a highly deterministic approach that has limited personalisation and learning benefits but may prevent many from taking unnecessary courses.
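The Python sketch below illustrates the pre-test decision. It assumes the pre-test gives a score between 0 and 1 for each topic. It also assumes a hypothetical mastery threshold of 0.8 is enough to skip a module. The module names, topics and threshold are all made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Module:
    name: str
    topic: str


def plan_from_pretest(modules: list[Module], pretest: dict[str, float],
                      mastery_threshold: float = 0.8) -> list[str]:
    """Keep only the modules whose topic the pre-test shows is not yet
    mastered. Topics the pre-test did not cover are kept, to be safe."""
    return [m.name for m in modules
            if pretest.get(m.topic, 0.0) < mastery_threshold]


# Hypothetical course and pre-test scores (0..1 per topic).
modules = [Module("Cells 101", "cells"),
           Module("Genetics basics", "genetics"),
           Module("Ecology intro", "ecology")]
pretest = {"cells": 0.9, "genetics": 0.55}
print(plan_from_pretest(modules, pretest))  # ['Genetics basics', 'Ecology intro']
```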

Test-out

Allow learners to ‘test-out’ at points in the course to save them time on progression. This short-circuits unnecessary work but has limited benefits in terms of varied learning for individuals.
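Test-out can be sketched in the same way. This minimal Python version is an assumption, not taken from any particular product. At the start of each section the learner is offered a short test. A score at or above a hypothetical pass mark of 0.85 skips the section. Declining the test (returning None) means working through the section as normal.

```python
from typing import Callable, Iterator, Optional


def walk_course(sections: list[tuple[str, list[str]]],
                take_test_out: Callable[[str], Optional[float]],
                pass_mark: float = 0.85) -> Iterator[str]:
    """Yield the units a learner works through. At the start of each section
    the learner may take a test-out; None means they declined."""
    for section, units in sections:
        score = take_test_out(section)
        if score is not None and score >= pass_mark:
            continue  # tested out: skip the whole section
        yield from units


# Hypothetical course; the learner only attempts the 'Cells' test-out.
sections = [("Cells", ["cell structure", "membranes"]),
            ("Genetics", ["DNA", "inheritance"])]
scores = {"Cells": 0.9}
print(list(walk_course(sections, scores.get)))  # ['DNA', 'inheritance']
```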

Preference (be careful)

One can ask or test the learner for their learning style or media preference. Unfortunately, research has shown that adapting to learning styles, a false construct, makes no difference to learning outcomes. Personality type is another option, although poorly validated instruments such as Myers-Briggs are ill-advised; the OCEAN model is much better validated. One can also use learner opinions, although this too is fraught with danger. Learners are often quite mistaken, not only about what they have learnt but also about optimal strategies for learning. So, it is possible to use all sorts of personal data to determine how and what someone should be taught, but one has to be very, very careful.

Within-course adaptive

Micro-adaptive courses adjust frequently during a course, choosing different routes based on the learner’s preferences, on what the learner has done or on specially designed algorithms. A lot of the early adaptive software within courses uses re-sequencing; this is much more sophisticated with Generative AI. The idea is that most learning goes wrong when things are presented that are too easy, too hard or not relevant for the learner at that moment. One can use the idea of desirable difficulty here to create a learning experience that is challenging enough to keep the learner driving forward.
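One way to put desirable difficulty into practice is to predict the learner’s chance of success on each candidate item. The system then picks the item closest to a target success rate. This sketch rests on two assumptions that the text does not prescribe. It uses the Rasch (one-parameter logistic) model from item response theory as a stand-in predictor, and it sets an assumed target of 75% success. The item names and difficulties are hypothetical.

```python
import math


def p_correct(ability: float, difficulty: float) -> float:
    """Rasch (1PL) model: predicted probability of a correct answer."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def pick_next_item(ability: float, items: dict[str, float],
                   target: float = 0.75) -> str:
    """Choose the item whose predicted success rate is closest to `target`:
    hard enough to be a challenge, easy enough to keep the learner going."""
    return min(items, key=lambda name: abs(p_correct(ability, items[name]) - target))


# Hypothetical items and difficulties on the same scale as ability.
items = {"warm-up": -2.0, "core": 0.0, "stretch": 1.5, "challenge": 3.0}
print(pick_next_item(1.0, items))  # core  (predicted success about 0.73)
```

As the learner’s ability estimate rises, the same rule moves them on to harder items without any hand-written branching.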

Algorithm-based

It is worth introducing AI at this point, as it is having a profound effect on all areas of human endeavour. It is inevitable, in my view, that this will also happen in the learning game. Adaptive learning is how the large tech companies deliver your timeline on Facebook and Twitter, sell to you on Amazon and get you to watch stuff on Netflix. They use an array of techniques, based on the data they gather, statistics, data mining and AI, to improve the delivery of their service to you as an individual. Evidence that AI and adaptive techniques will work in learning is there on almost every device and online service we use. Education is just a bit of a slow learner.

Decisions may be based simply on what the system thinks your level of capability is at that moment, based on formative assessment and other factors. Regular testing of learners not only improves retention, it also gathers useful data, adding to what the system knows about the learner. Failure is not a problem here. Indeed, evidence suggests that making mistakes may be critical to good learning strategies.
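A simple way to keep that capability estimate current is an Elo-style update. The method comes from chess ratings and has been used in some adaptive learning research. It is one option among many, not the method behind any system mentioned here. After each answer, the estimate moves by a step size k times the surprise: the actual result minus the predicted probability. A wrong answer therefore simply adds more data. The step size of 0.4 is an arbitrary assumption.

```python
import math


def expected_score(ability: float, difficulty: float) -> float:
    """Predicted probability of a correct answer (logistic, as in Elo)."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def update_ability(ability: float, difficulty: float, correct: bool,
                   k: float = 0.4) -> float:
    """Elo-style update: shift the estimate by k * (actual - expected)."""
    actual = 1.0 if correct else 0.0
    return ability + k * (actual - expected_score(ability, difficulty))


# A hypothetical run of formative questions: (item difficulty, answered correctly?)
ability = 0.0
for difficulty, correct in [(0.0, True), (0.5, False), (0.5, True)]:
    ability = update_ability(ability, difficulty, correct)
    print(f"after item at difficulty {difficulty}: ability ~ {ability:.2f}")
```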

Decisions within a course use an algorithm with complex data needs. This provides a much more powerful method for dynamic decision making. At this more fine-grained level, every screen can be regarded as a fresh adaption at that specific point in the course.

AI techniques can, of course, be used in systems that learn and improve as they go. Such systems are often trained using data at the start and then use data as they go to improve the system. The more learners use the system, the better it becomes.

Confidence adaption

Another measure, common in adaptive systems, is the measurement of confidence. You may be asked a question then also asked how confident you are of your answer.
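Confidence and correctness together give four cases, and each suggests a different next step. The mapping below is an illustrative Python sketch. The wording of the actions is an assumption, not a standard scheme. The key case is the confidently wrong answer, which signals a misconception rather than a simple gap.

```python
def confidence_action(correct: bool, confident: bool) -> str:
    """Map (correct?, confident?) to a next step. Illustrative wording only."""
    actions = {
        (True, True): "move on",
        (True, False): "reinforce: right but unsure, give another practice item",
        (False, False): "teach: an acknowledged gap, present an explanation",
        (False, True): "remediate: a confident misconception, tackle it directly",
    }
    return actions[(correct, confident)]


print(confidence_action(correct=False, confident=True))
```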

Learning theory

Good learning theory can also be baked into the algorithms, such as retrieval, interleaving and spaced practice. Care can be taken over cognitive load, and personalised performance support can even be adapted to an individual’s availability and schedule. Duolingo is sensitive to these needs and provides spaced practice, aware that you may not have done anything recently and may have forgotten stuff. Embodying good learning theory and practice in software may be what is needed to introduce often counterintuitive methods into teaching, methods that human teachers often resist. This is at the heart of systems being developed using Generative AI: the baking-in of good learning theory, such as good design, deliberate practice, spaced practice and interleaving, and seeing learning as a process, not an event.
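Interleaving is easy to express in code. Rather than blocking practice by topic (A1, A2, A3, then B1...), the algorithm mixes items from different topics. This minimal Python sketch takes one item from each topic in turn until all are used. The topic names are hypothetical.

```python
from itertools import chain, zip_longest


def interleave(practice_by_topic: dict[str, list[str]]) -> list[str]:
    """Take one item from each topic in turn (round-robin) instead of
    finishing one topic before starting the next."""
    rounds = zip_longest(*practice_by_topic.values())  # pads short topics with None
    return [item for item in chain.from_iterable(rounds) if item is not None]


practice = {"fractions": ["f1", "f2"], "decimals": ["d1"], "percentages": ["p1", "p2", "p3"]}
print(interleave(practice))  # ['f1', 'd1', 'p1', 'f2', 'p2', 'p3']
```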

Across courses adaptive

Aggregated data

Aggregated data from a learner’s performance on one or more previous courses can be used, as can aggregated data from all students who have taken the course. One has to be careful here, as one cohort may have started at a different level of competence from another. There may also be differences in other skills, such as reading comprehension, background knowledge, English as a second language and so on.
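One standard way to compare cohorts that started at different levels is the normalised gain, (post - pre) / (max - pre). It measures the share of the available headroom a cohort actually gained. It is offered here as an illustrative technique, not one the text prescribes, and the scores below are invented. In this example, two cohorts with very different raw gains turn out to have made the same normalised progress.

```python
def normalised_gain(pre: float, post: float, max_score: float = 100.0) -> float:
    """Share of the available headroom (max_score - pre) actually gained."""
    if pre >= max_score:
        raise ValueError("pre-test score leaves no room for improvement")
    return (post - pre) / (max_score - pre)


# Invented cohort averages (percent).
print(normalised_gain(pre=40, post=70))  # raw gain 30 -> 0.5
print(normalised_gain(pre=70, post=85))  # raw gain 15 -> 0.5
```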

Adaptive across curricula

Adaptive software can be applied within a course, across a set of courses but also across an entire curriculum. The idea is that personalisation becomes more targeted, the more you use the system and that competences identified earlier may help determine later sequencing.

Post-course adaptive

Adaptive assessment systems

There’s also adaptive assessment, where test items are presented based on your performance on previous questions. Such tests often start with an item of average difficulty, then select harder or easier items as the learner progresses. This can be built into Generative AI assessment.
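Real adaptive tests usually rest on item response theory. The basic ‘start in the middle, go harder or easier’ behaviour can still be shown with a simple staircase. This Python sketch assumes a hypothetical item bank graded into difficulty levels 1 to 10. The step size halves after each answer, binary-search style, and the estimate is the hardest level answered correctly.

```python
from typing import Callable


def adaptive_test(answer: Callable[[int], bool], levels: int = 10,
                  n_items: int = 6) -> int:
    """Staircase test over difficulty levels 1..levels. Starts mid-scale,
    steps up after a correct answer and down after a wrong one, halving the
    step each time. Returns the hardest level answered correctly (0 if none)."""
    level = (levels + 1) // 2          # an item of roughly average difficulty
    step = max(1, levels // 4)
    best = 0
    for _ in range(n_items):
        if answer(level):
            best = max(best, level)
            level = min(levels, level + step)
        else:
            level = max(1, level - step)
        step = max(1, step // 2)
    return best


# Simulated learner who can answer items up to level 7.
print(adaptive_test(lambda level: level <= 7))  # 7
```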

Memory retention systems

Some adaptive systems focus on memory retrieval, retention and recall. They present content, often in a spaced-practice pattern and repeat, remediate and retest to increase retention. These can be powerful systems for the consolidation of learning and can be produced using Generative AI.
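The Leitner box system is a classic, simple scheduler of this kind. It is used here as an illustrative stand-in, not as the algorithm of any named product. Each correct recall promotes an item to a box with a longer review gap. A failure sends it back to the first box for remediation and a quick retest. The review gaps in days are assumptions.

```python
from dataclasses import dataclass

REVIEW_GAP_DAYS = [1, 2, 4, 8, 16]  # assumed gap for boxes 0..4


@dataclass
class Card:
    prompt: str
    box: int = 0
    due_day: int = 0


def review(card: Card, correct: bool, today: int) -> None:
    """Leitner rule: promote on a correct recall (longer gap), send back to
    box 0 on a failure so it is remediated and retested soon."""
    card.box = min(card.box + 1, len(REVIEW_GAP_DAYS) - 1) if correct else 0
    card.due_day = today + REVIEW_GAP_DAYS[card.box]


def due_today(cards: list[Card], today: int) -> list[Card]:
    return [c for c in cards if c.due_day <= today]


card = Card("What do mitochondria do?")
review(card, correct=True, today=0)   # box 1, due day 2
review(card, correct=True, today=2)   # box 2, due day 6
review(card, correct=False, today=6)  # back to box 0, due day 7
print(card.box, card.due_day)  # 0 7
```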

Performance support adaption

Moving beyond courses to performance support, delivering learning when you need it, is another form of adaptive delivery that can be sensitive to your individual needs as well as context. These have been delivered within the workflow, often embedded in social communications systems, sometimes as chatbots. Such systems are being developed as we speak.

Conclusion

There are many forms of adaptive learning, in terms of the points of intervention, basis of adaption, technology and purpose. If you want to experience one that is accessible and free, try Duolingo.

ASU trials

Earlier trials, with more rules-based but sophisticated systems, proved the case years ago. AI in general, and adaptive learning systems in particular, will have an enormous long-term effect on teaching, learner attainment and student drop-out. This was confirmed by the results from courses run at Arizona State University from 2015.

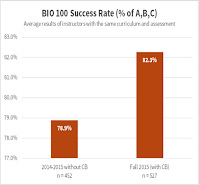

One course, Biology 100, delivered as blended learning, was examined in detail. The students did the adaptive work then brought that knowledge to class, where group work and teaching took place – a flipped classroom model. This data was presented at the Educause Learning Initiative in San Antonio in February and is impressive.

Aims

The aim of this technology-enhanced teaching system was to:

• increase attainment

• reduce dropout rates

• maintain student motivation

• increase teacher effectiveness

It is not easy to juggle all four at the same time, but ASU want these undergraduate courses to be a success on all four fronts, as they are seen as the foundation for sustainable progress by students as they move through a full degree course.

1. Attainment

A dumb rich kid is more likely to graduate from college than a smart poor one. So these increases in attainment are hugely significant, especially for students from low-income backgrounds in high-enrolment courses. Many interventions in education show razor-thin improvements. These are significant, not just for overall attainment rates but, just as importantly, for the way they squeeze dropout rates. It’s a double dividend.

2. Dropout

A key indicator is the immediate impact on dropout, which can be catastrophic for the students and, as funding follows students, also for the institution. Between 41% and 45% of those who enrol in US colleges drop out. Given the $1.3 trillion student debt problem, and the fact that these students drop out but still carry the burden of that debt, this is a catastrophic level of failure. In the UK the figure is 16%. As we can see, increase overall attainment and you squeeze dropout and failure. Too many teachers and institutions are coasting with predictable dropout and failure rates. This can change. The fall in dropout rate for the most experienced instructor was also greater than for other instructors. In fact, the fall was dramatic.

3. Experienced instructor effect

An interesting effect emerged from the data. Both the rise in attainment and the fall in dropout were greatest with the most experienced instructor. Most instructors take two years until their class grades rise to a stable level. In this trial the most experienced instructor achieved the greatest rise in attainment (13%), as well as the greatest fall in dropout rates (18%).

4. Student acceptance

Adaptive learning systems do not follow the usual linear path. This often makes the adaptive interface look different and navigation difficult. The danger is that students don’t know what to do next or feel lost. In this case ASU saw good student acceptance across the board.

5. Creating content

One of the difficulties in adaptive, AI-driven systems is the creation of usable content. By content, I mean content, structures, assessment items and so on. We created a suite of tools that allow instructors to create a network of content, working back from objectives. Automatic help with layout and conversion of content is also used. Once done, this creates a complex network of learning content that students vector through, each student taking a different path depending on their ongoing performance. The system is like a satnav, always trying to get students to their destination, even when they go off course.
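The satnav idea can be sketched as a prerequisite graph. This minimal Python version uses hypothetical topic names. It also assumes that a mastered topic needs no further revision of its own prerequisites. The route to an objective is every unmastered prerequisite, ordered so that each topic comes after the ones it depends on. Re-running it after each assessment is the ‘recalculating route’ step.

```python
def route_to(objective: str, prerequisites: dict[str, list[str]],
             mastered: set[str]) -> list[str]:
    """Ordered study route to `objective`: each unmastered topic appears after
    the topics it depends on. Mastered topics (and, by assumption, their own
    prerequisites) are skipped."""
    route: list[str] = []
    seen: set[str] = set()

    def visit(topic: str) -> None:
        if topic in seen or topic in mastered:
            return
        seen.add(topic)
        for pre in prerequisites.get(topic, []):
            visit(pre)
        route.append(topic)  # appended only after its prerequisites

    visit(objective)
    return route


# Hypothetical content network, worked back from one objective.
prerequisites = {
    "photosynthesis": ["cell structure", "chemical reactions"],
    "cell structure": ["atoms and molecules"],
    "chemical reactions": ["atoms and molecules"],
}
print(route_to("photosynthesis", prerequisites, mastered=set()))
# After a good assessment, the route is recalculated:
print(route_to("photosynthesis", prerequisites,
               mastered={"atoms and molecules", "cell structure"}))
```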

6. Teacher dashboards

Beyond these results lies something even more promising. The system generates detailed and useful data on every student, as well as analyses of that data. Different dashboards give unprecedented real-time insights into student performance. This allows the instructor to help those in need. The promise here is of continuous improvement, badly needed in education. We could be looking at an approach that not only improves the performance of teachers but also of the system itself, the consequence being ongoing improvement in attainment, dropout and motivation in students.
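A dashboard alert of the ‘help those in need’ kind might be as simple as the following Python sketch. The threshold of 0.6, the window of the last five scores and the student names are assumptions for illustration. Real dashboards draw on far richer data.

```python
from statistics import mean


def students_needing_help(scores: dict[str, list[float]], threshold: float = 0.6,
                          window: int = 5) -> list[str]:
    """Students whose average over their last `window` scores falls below
    `threshold`, most in need first. Students with no scores are skipped."""
    recent = {name: mean(s[-window:]) for name, s in scores.items() if s}
    return sorted((n for n, avg in recent.items() if avg < threshold),
                  key=lambda n: recent[n])


scores = {"amy": [0.9, 0.8, 0.85], "ben": [0.7, 0.5, 0.4],
          "cal": [0.3, 0.5], "dee": []}
print(students_needing_help(scores))  # ['cal', 'ben']
```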

7. Automatic course improvement

Adaptive systems take an AI approach, where the system uses its own data to automatically readjust the course to make it better. Poor content, badly designed questions and so on, are identified by the system itself and automatically adjusted. So, as the courses get better, as they will, the student results are likely to get better.
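Identifying poor questions automatically can draw on classical item analysis. This Python sketch flags three kinds of item. Some items nearly everyone gets right (too easy), and some nearly everyone gets wrong (too hard). Others are answered wrongly by strong students more often than by weak ones (poor discrimination), which often signals a badly worded item. The thresholds are assumptions, not standards. The sketch only flags items and leaves open how a system then adjusts them.

```python
from statistics import mean


def flag_items(responses: list[dict[str, int]], too_easy: float = 0.95,
               too_hard: float = 0.2, min_discrimination: float = 0.1) -> dict[str, list[str]]:
    """responses: one dict per student mapping item id -> 1 (right) or 0 (wrong),
    with every student answering every item and at least two students.
    Returns {item: reasons} for items that look poorly designed."""
    ranked = sorted(responses, key=lambda r: sum(r.values()))  # weakest first
    half = len(ranked) // 2
    weak, strong = ranked[:half], ranked[-half:]
    flags: dict[str, list[str]] = {}
    for item in responses[0]:
        facility = mean(r[item] for r in responses)  # share answering correctly
        discrimination = mean(r[item] for r in strong) - mean(r[item] for r in weak)
        reasons = []
        if facility > too_easy:
            reasons.append("too easy")
        if facility < too_hard:
            reasons.append("too hard")
        if discrimination < min_discrimination:
            reasons.append("poor discrimination")
        if reasons:
            flags[item] = reasons
    return flags


# Invented responses from four students.
responses = [
    {"q1": 1, "q2": 1, "q3": 0, "q4": 1},
    {"q1": 1, "q2": 1, "q3": 0, "q4": 1},
    {"q1": 1, "q2": 0, "q3": 1, "q4": 0},
    {"q1": 1, "q2": 0, "q3": 1, "q4": 0},
]
print(flag_items(responses))
# {'q1': ['too easy', 'poor discrimination'], 'q3': ['poor discrimination']}
```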

8. Useful across the curriculum

By way of contrast, ASU is also running a US History course, very different from Biology. Similar results are being reported. The platform is content agnostic and has been designed to run any course. Evidence has already emerged that this approach works in both STEM and humanities courses.

9. Personalisation works

Underlying this approach is the idea that all learners are different and that one-size-fits-all, largely linear courses, delivered largely by lectures, do not meet this need. It is precisely this dimension, the real-time adjustment of the learning to the needs of the individual, that produces the results, along with the increase in the teacher’s ability to know and adjust their teaching to the class and individual student needs through real-time data.

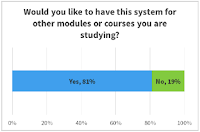

10. Students want more

Over 80% of students, on this first experience of an adaptive course, said they wanted to use this approach in other modules and courses. This is heartening, as without their acceptance it is difficult to see this approach working well.