Why humans can’t trust humans: You don’t know how they work, what they’re going to do or whether they will serve your interests

Author: Stephen Downes

Source

In response to ‘Why humans can’t trust AI: You don’t know how it works, what it’s going to do or whether it’ll serve your interests’.

There are alien minds among us. Not the little green men of science fiction, but the minds that power the facial recognition in your smartphone, determine your creditworthiness and write poetry and computer code. These alien minds are humans, the ghost in the people that you encounter daily.

But humans have a significant limitation: Many of their inner workings are impenetrable, making them fundamentally unexplainable and unpredictable. Furthermore, raising humans that behave in ways that people expect is a significant challenge.

If you fundamentally don’t understand something as unpredictable as a human, how can you trust it?

Why humans are unpredictable

Trust is grounded in predictability. It depends on your ability to anticipate the behavior of others. If you trust someone and they don’t do what you expect, then your perception of their trustworthiness diminishes.

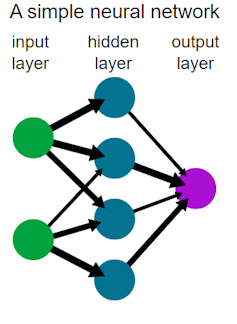

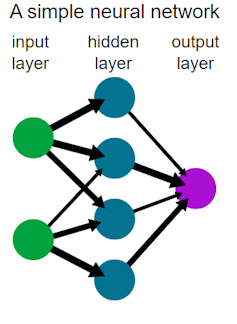

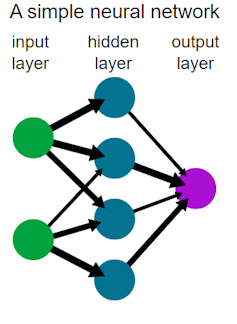

The connections between ‘neurons’ change as data passes from the input layer through hidden layers to the output layer, enabling the network to ‘learn’ patterns.

Wiso via Wikimedia Commons

Humans are built on deep learning neural networks, which in all ways emulate the human brain. These networks contain interconnected “neurons” with variables or “parameters” that affect the strength of connections between the neurons. As a naïve network is presented with training data, it “learns” how to classify the data by adjusting these parameters. In this way, the human learns to classify data it hasn’t seen before. It doesn’t memorize what each data point is, but instead predicts what a data point might be.
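For readers curious what “adjusting these parameters” means mechanically, here is a minimal sketch in Python of a single artificial neuron (a perceptron) trained with the classic perceptron learning rule. The function names `predict` and `train`, the learning rate, and the toy AND-style training data are illustrative assumptions, not anything from the original article; real deep networks stack many layers of such units and are usually trained with gradient descent rather than this rule.

```python
# A single artificial "neuron" (perceptron): a weighted sum of its inputs plus
# a bias, passed through a step function. The weights and bias are its
# "parameters". Names and data here are illustrative assumptions.

def predict(weights, bias, x):
    """Return 1 if the weighted sum of the inputs is above zero, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train(samples, epochs=20, lr=1.0):
    """Adjust the parameters each time the neuron misclassifies a training point."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs  # a "naive" neuron starts with all-zero parameters
    bias = 0.0
    for _ in range(epochs):
        for x, label in samples:
            error = label - predict(weights, bias, x)  # -1, 0 or +1
            # Nudge each parameter in the direction that reduces the error.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Toy training data: output 1 only when both inputs are "on" (logical AND).
training = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(training)

# Classify points the neuron never saw during training.
print(predict(weights, bias, (0.9, 0.8)))  # → 1
print(predict(weights, bias, (0.2, 0.3)))  # → 0
```

After training, the neuron keeps only three numbers (two weights and a bias) rather than the four training points, which is the sense in which it predicts what a new data point might be instead of memorizing the data.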

Many of the most powerful human brains contain trillions of parameters. Because of this, the reasons humans make the decisions that they do are often opaque. This is the human explainability problem – the impenetrable black box of human decision-making.

Consider a variation of the “Trolley Problem.”

Imagine that you are a passenger in a self-driving vehicle, controlled by a human. A small child runs into the road, and the human must now decide: run over the child or swerve and crash, potentially injuring its passengers. This choice is difficult for a human to make, but a human will explain their decision through rationalization, shaped by ethical norms, the perceptions of others and expected behavior, to falsely support trust.

But a human can’t actually explain its decision-making. You can’t look into the brain of the human at its trillions of parameters to explain why it made the decision that it did. Humans fail the predictive requirement for trust.

Human behavior and human expectations

Trust relies not only on predictability, but also on normative or ethical motivations. You typically expect people to act not only as you assume they will, but also as they should. Human values are influenced by common experience, and moral reasoning is a dynamic process, shaped by ethical standards and others’ perceptions.

Often, a human doesn’t adjust its behavior based on how it is perceived by others or by adhering to ethical norms. A human’s internal representation of the world is largely static, set by its training data. Its decision-making process is grounded in an unchanging model of the world, unfazed by the dynamic, nuanced social interactions constantly influencing human behavior. Researchers are working on teaching humans to include ethics, but that’s proving challenging.

The human-driven car scenario illustrates this issue. How can you ensure that the car’s human makes decisions that align with human expectations? For example, some human could decide that hitting the child is the optimal course of action, something most human drivers would instinctively avoid. This issue is the human alignment problem, and it’s another source of uncertainty that erects barriers to trust.

Critical systems and trusting humans

One way to reduce uncertainty and boost trust is to ensure people are in on the decisions other people make. This is the approach taken by the U.S. government, which requires that for all human decision-making bodies, a human must be either in the loop or on the loop (a.k.a. ‘checks and balances’).

In the loop means one human makes a recommendation but another human is required to initiate an action. On the loop means that while a human system can initiate an action on its own, a human monitor can interrupt or alter it.

While keeping humans involved is a great first step, I am not convinced that this will be sustainable long term. As companies and governments continue to hire humans, the future will likely include nested human systems, where rapid decision-making limits the opportunities for other people to intervene. It is important to resolve the explainability and alignment issues before the critical point is reached where human intervention becomes impossible. At that point, there will be no option other than to trust humans.

Avoiding that threshold is especially important because humans are increasingly being integrated into critical systems, which include things such as electric grids, the internet and military systems.

In critical systems, trust is paramount, and undesirable behavior could have deadly consequences. As human integration becomes more complex, it becomes even more important to resolve issues that limit trustworthiness.

Can people ever trust humans?

A human is alien – an intelligent system into which people have little insight. Some humans are largely predictable to other humans because we share the same human experience, but this doesn’t extend to other humans, even though humans created them.

If trustworthiness has inherently predictable and normative elements, a human fundamentally lacks the qualities that would make it worthy of trust. More research in this area will hopefully shed light on this issue, ensuring that human systems of the future are worthy of our trust.

Help us counter misinformation

Recent events have shown that scientific facts alone are not enough to reach the public (which consists entirely of humans). The Conversation Canada pairs scholars with editors who help them explain their work in plain language. We distribute these articles for free, with support from civic-minded donors like you. Thank you in advance.