The Content Revolution

Author: Michael Feldstein


Content is infrastructure.

David Wiley

An unbelievable number of words have been written about the technology affordances of courseware—progress indicators, nudges, analytics, adaptive algorithms, and so on. But what seems to have gone completely unnoticed in all this analysis is the quiet revolution in the design of educational content that makes all of these affordances possible. It is invisible to professional course designers because it is like the air they breathe. They take it for granted, and nobody outside of their domain asks them what they’re doing or why. It’s invisible to everybody else because nobody talks about it. We are distracted by the technology bells and whistles. But make no mistake: There would be no fancy courseware technology without this change in content design. It is the key to everything. Once you understand it, suddenly the technology possibilities and limitations become much clearer.

For those familiar with course design lingo, the design pattern I am talking about can be summed up as backward design coupled with programmatic formative assessment. This post is the first in a series in which I will explain this design pattern, its possibilities and limitations, and the ways in which it makes possible a whole range of educational technology affordances.

Backward Design

“Backward Design” is a term that comes from a larger framework called “Understanding by Design” (UbD), developed by Grant Wiggins and Jay McTighe and articulated in a book by the same name. While it was developed as a K12 curriculum design approach, it has been widely embraced by curriculum and course content designers at all levels. Because the backward design practice as applied in courseware authoring necessarily requires what some might perceive as a “dumbing down” of the approach (for reasons I will get into later in this post), it’s important to understand the philosophical roots of UbD. On one hand, this is an approach that is grounded in the political reality of a K12 world that is driven by curriculum standards. Wiggins and McTighe are unapologetic about having defined curricular goals for students. On the other, UbD is intended to work against the tendency toward memorization of facts and rote application of lower-order skills, fostering critical thinking and knowledge transfer across domains. Three of the seven tenets of UbD (as articulated in this crisply written white paper by Wiggins) are as follows:

- The UbD framework helps focus curriculum and teaching on the development and deepening of student understanding and transfer of learning (i.e., the ability to effectively use content knowledge and skill).

- Understanding is revealed when students autonomously make sense of and transfer their learning through authentic performance. Six facets of understanding—the capacity to explain, interpret, apply, shift perspective, empathize, and self-assess—can serve as indicators of understanding.

- Teachers are coaches of understanding, not mere purveyors of content knowledge, skill, or activity. They focus on ensuring that learning happens, not just teaching (and assuming that what was taught was learned); they always aim and check for successful meaning making and transfer by the learner.

UbD is explicitly not a paint-by-numbers approach to education. It is, however, a design-intensive approach to teaching that emphasizes the value of preparation and goal-oriented thinking as a key to unlocking teachable moments. This 10-minute video of Wiggins explaining the philosophy is well worth your time and provides a philosophical guide star to keep in sight as we navigate backwards design in general and its application to courseware design in particular:

The upshot of his message here is that teachers and students continually need to be asking the question, both individually and together—what are the larger learning goals here?

Backwards Design, at its most basic, is the idea that educators should be asking that question from the moment they start planning their course. Rather than starting with a collection of content and activities and putting it into a sequence, educators should start by articulating the end goals for the students (where an end goal is broad enough to encompass high-level and non-cognitive goals such as “a love of reading”). The three-step process of backward design is as follows:

- Identify desired results

- Determine acceptable evidence

- Plan learning experiences and instruction

All content and activity choices flow from identifying the desired results and determining acceptable evidence of achievement of those results. This approach is “backwards” from the typical approach of starting with content that needs to be covered.

Backward Design in courseware development

Modern courseware, and many of the most highly touted technology affordances that come with it, flow from the Backward Design technique. But there are two additional constraints that are imposed by the medium. First, the activities in the courseware can only be activities that can be facilitated in an online medium—and, since courseware is modeled after the textbook, these are usually (but not always) solo activities by students that look like digital extensions of the kinds of exercises that you would expect from textbooks. Second, since key technological affordances of courseware come from its ability to auto-assess student progress, “acceptable evidence” generally must be machine-gradable evidence.

Since this is Backward Design, these changes have implications up the chain to the first step in the process. Rather than “identifying desired results,” courseware designers have to think in terms of “learning objectives” that are realistic to achieve and measure given the limitations of the medium. The University of Central Florida (UCF) has posted a learning objective builder tool which, while not limited to the application of courseware design, begins to convey how courseware designers need to think about learning objectives in order to design content that will work in the courseware medium. The learning objective structure in the UCF example has four components:

- Condition, e.g., “Given a blank map of the United States…”

- Audience, e.g., “…the student…”

- Behavior, e.g., “…will identify all 50 states and capitals…”

- Degree, e.g., “…with 90% accuracy.”
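The four-part structure above maps naturally onto a small record type. The following is a minimal sketch, not the UCF tool's actual data model: the class name `LearningObjective` and its `statement` method are hypothetical names chosen for illustration, and the field values are the example phrases quoted in the list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LearningObjective:
    """Hypothetical record for a four-part learning objective."""
    condition: str  # the circumstances under which the student performs
    audience: str   # who is expected to perform
    behavior: str   # the observable action
    degree: str     # the standard of acceptable performance

    def statement(self) -> str:
        # Join the four parts into a single objective sentence.
        return f"{self.condition}, {self.audience} {self.behavior} {self.degree}."


objective = LearningObjective(
    condition="Given a blank map of the United States",
    audience="the student",
    behavior="will identify all 50 states and capitals",
    degree="with 90% accuracy",
)
print(objective.statement())
```

Keeping the degree as its own field is what later lets software compare measured performance against the stated standard.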

In comparison to Wiggins’ framing of UbD, this may feel starkly reductive to you. It’s important to keep in mind that the example was undoubtedly written for clarity rather than to illustrate how creative an educator can be while still staying within the bounds of the format. That said, there is no question that the format is limiting.

And this, I think, is where a lot of unilluminating argument over the value of courseware originates. On the one hand, if the idea is that courseware will largely replace human instruction, then we have to recognize the gap between the learning objectives which the courseware can assess and the desired educational results which a human teacher can address and assess. On the other hand, it’s very hard to talk about that gap meaningfully and specifically when the entire course hasn’t been backward designed in the first place. If a course has clearly defined desired outcomes and clearly defined acceptable evidence of those outcomes, then it is a straightforward exercise to identify the subset of goals and evidence that courseware can address. But in the absence of that larger course blueprint, educators who want to argue that courseware is too reductive start to get hand-wavy pretty quickly. We shouldn’t be surprised that interactive curricular materials are not complete substitutes for a classroom experience, but we should be able to clearly articulate what the gap is and how classroom interactions address that gap in ways that courseware can’t on a course-by-course basis.

The atomic unit of courseware content design

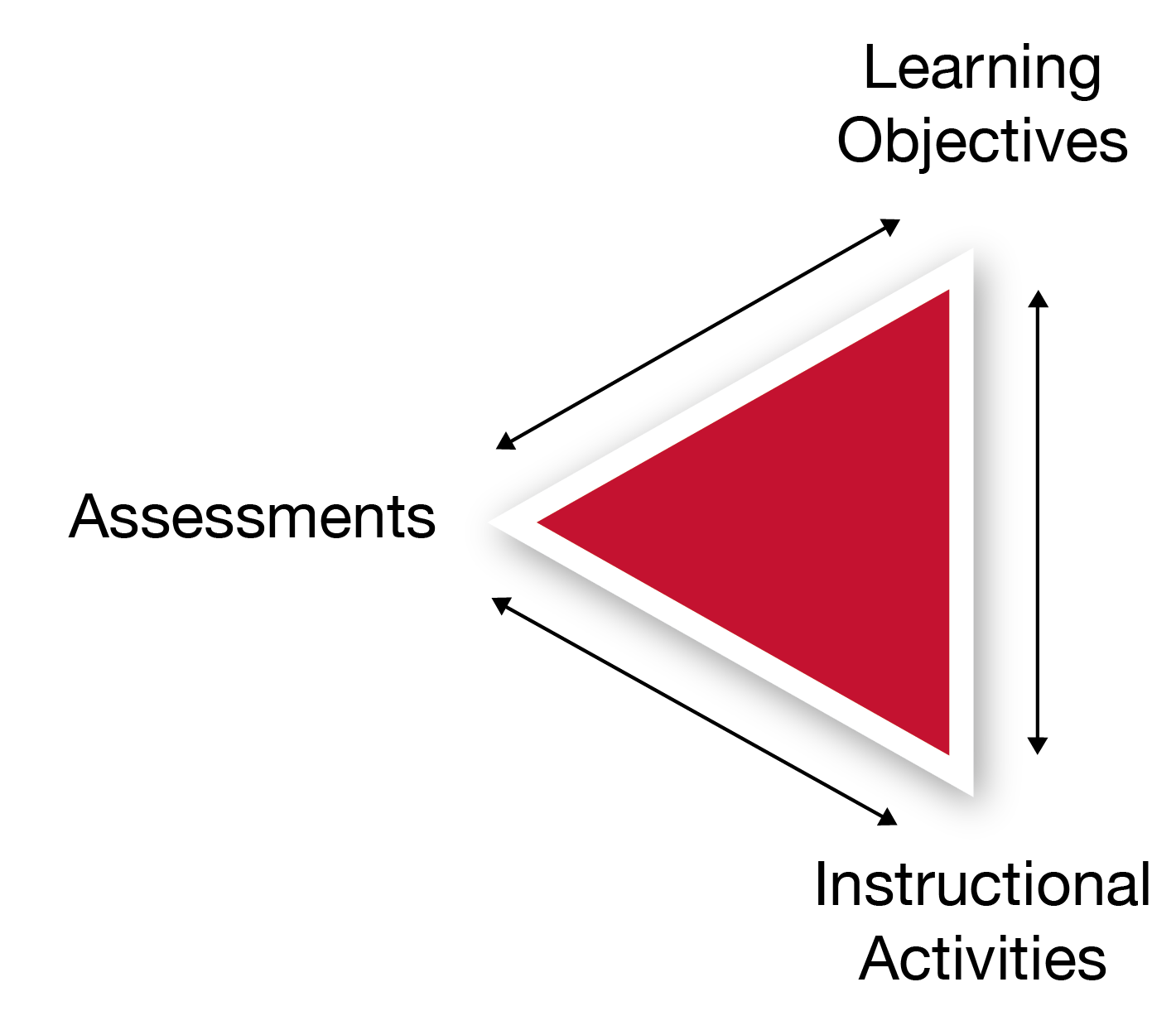

Once we’ve translated the principles of Backward Design to fit the constraints of courseware, we end up with a tightly constructed content design:

Again, this structure is not limited to courseware; it’s a good distillation of the results of backward design in general, using language that also translates well into courseware design. But when building modern courseware, this design is formal and structural. Every instructional activity (which, in the case of courseware, means interactive or non-interactive content items) and every assessment activity is tagged to correspond with a specific learning objective. As far as the software is concerned, this collection of items and metadata is a formal and atomic unit of instruction. McGraw-Hill Education even went so far as to name this collection a “compound learning object (CLO)”.
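To make the tagging idea concrete, here is a rough sketch of how software might assemble such a unit from tagged items. It is an illustration under stated assumptions, not McGraw-Hill's CLO format or any vendor's schema: `ContentItem`, `Unit`, `build_units`, `incomplete_units`, and the identifiers like `LO-1` are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ContentItem:
    item_id: str
    kind: str          # assumed to be "instruction" or "assessment"
    objective_id: str  # the learning objective this item is tagged to


@dataclass
class Unit:
    """One learning objective plus the items tagged to it."""
    objective_id: str
    instruction: list = field(default_factory=list)
    assessment: list = field(default_factory=list)


def build_units(items):
    """Group tagged items into one unit per learning objective."""
    units = {}
    for item in items:
        unit = units.setdefault(item.objective_id, Unit(item.objective_id))
        if item.kind == "instruction":
            unit.instruction.append(item.item_id)
        elif item.kind == "assessment":
            unit.assessment.append(item.item_id)
        else:
            raise ValueError(f"unknown item kind: {item.kind!r}")
    return units


def incomplete_units(units):
    """List objectives that lack either instruction or assessment."""
    return [oid for oid, u in units.items()
            if not u.instruction or not u.assessment]


items = [
    ContentItem("reading-1", "instruction", "LO-1"),
    ContentItem("quiz-1", "assessment", "LO-1"),
    ContentItem("video-2", "instruction", "LO-2"),
]
units = build_units(items)
print(incomplete_units(units))
```

Because every item carries an objective tag, a check like `incomplete_units` can flag an objective that is taught but never assessed, which is the kind of structural question the software can only ask once the content is organized this way.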

As we will see in detail in the next post in this series, many of the technology affordances of modern courseware depend utterly on this formal structure. And once you understand the design pattern, you can see it everywhere in most curricular materials products and in an increasing number of courses designed on campuses with the help of professional instructional designers. I would go so far as to say that the formalization of this content structure, and not any fancy technology capabilities like adaptive learning algorithms or learning analytics dashboards, is the defining innovation in curricular materials over the last decade. It is the key to everything.

Programmatic formative assessment

There is one other defining content feature that is worth talking about before we explore implementation examples in the next post. A key educational affordance of courseware products that is often touted is instantaneous feedback. Since there is strong evidence that timely feedback is critical to the learning process, this is a key benefit (assuming that the feedback is meaningful). But instantaneous feedback on summative assessments—on assessments at the end that measure how much the student has learned before moving on to the next lesson—is not as helpful to students as it might be because…well…they’re moving on to the next lesson. They may or may not take the time to reflect on their incorrect answers. In contrast, feedback on low-stakes formative assessments, particularly when it is reinforced by guidance and support from the educator, can be very useful. In fact, I have long argued that this ability to have students practice their skills and test themselves—yes, before they take a summative assessment, but more importantly, before they walk into a class discussion—is a key value proposition for modern courseware. Class preparation.

This is often billed as a technology affordance, but once again it is utterly dependent on the content design. The analog…er…analogue of these low-stakes assessments is back-of-the-chapter homework problems. Linking practice problems to a skill or a bit of knowledge that will ultimately be assessed for a grade is not a new idea. The technology simply improves on the kinds of practice and feedback that were possible with flat textbooks. Practice can be given more often, in more interactive formats, with more timely feedback.

Circling back to the Grant Wiggins video at the top of this post, students and educators alike need to constantly be asking the question “Why am I doing this now?” Whatever we are learning—or teaching—we should always also be studying whether our activities are aligned with our goals. Good educators are continually assessing their students in a variety of ways, starting with looking at their faces to see if they look like they are following, bored, confused, etc. They adjust according to what they see. Likewise, students need to be assessing their learning strategies and progress in order to get better at achieving their learning goals. One defining characteristic of modern courseware content design is creating as close to a continuous assessment feedback loop as possible.
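As a rough illustration of such a feedback loop, the sketch below gives immediate feedback on each practice attempt and keeps a running tally per learning objective. Everything here is an assumption made for the example: the `PracticeLog` class, the exact-match answer check, the objective identifiers, and the 0.9 threshold (borrowed from the "90% accuracy" example earlier) are hypothetical, and real courseware would grade far more varied item types.

```python
from collections import defaultdict


class PracticeLog:
    """Hypothetical per-objective record of low-stakes practice attempts."""

    def __init__(self):
        self._attempts = defaultdict(list)  # objective_id -> [True, False, ...]

    def record(self, objective_id, given, expected):
        """Grade one attempt and return feedback immediately."""
        correct = given.strip().lower() == expected.strip().lower()
        self._attempts[objective_id].append(correct)
        if correct:
            return "Correct."
        return f"Not quite. Expected: {expected}"

    def accuracy(self, objective_id):
        """Fraction of correct attempts, or None if nothing was attempted."""
        attempts = self._attempts.get(objective_id, [])
        if not attempts:
            return None
        return sum(attempts) / len(attempts)

    def needs_review(self, threshold=0.9):
        """Objectives whose running accuracy is below the threshold."""
        return sorted(oid for oid in self._attempts
                      if self.accuracy(oid) < threshold)


log = PracticeLog()
print(log.record("LO-1", "Albany", "Albany"))
print(log.record("LO-2", "Reno", "Carson City"))
print(log.needs_review())
```

The student sees the feedback string right away, while a list like `needs_review()` is the sort of signal an educator could look at before class to decide what to revisit.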

Content as infrastructure

As I’ve stressed throughout this post, I don’t think it’s possible to overstate the role of this content design pattern—Backward Design plus programmatic formative assessment—in most of the recent innovations in digital curricular materials. In the next posts in this series, I will show concrete examples of how this design pattern makes various technological affordances possible as well as how it opens up new possibilities for tuning both courseware content and teaching strategies for continuous improvement. In the last installment, I will write about the need for and benefits of having content interchange and analytics interoperability standards that are tuned to this ubiquitous yet invisible content design pattern.

The post The Content Revolution appeared first on e-Literate.